Today, I want to share with you something that I’ve been working on for the last several months — a concrete vision and proposal for supporting the Internet’s development.

For some, “Internet development” is about building out more networks in under-served parts of the world. For others, myself included, it has always also meant evolving the technology itself: finding answers to age-old or just-discovered limitations and improving the state of the art of the functioning, deployed Internet. In either case, development means getting beyond the status quo. And, for the Internet, the status quo means stagnation, and stagnation means death.

Twenty-odd years ago, when I first got involved in Internet technology development, it was clear that the technology was evolving dynamically. Engineers got together regularly to work out next steps large and small. Incremental improvements were important, but people were not afraid to think of and tackle the larger questions of the Internet’s future. And the engineers who got together were the ones who would go home to their respective companies and implement the agreed-on changes in their products and networks.

Time passes, and things change. Now that the Internet is an important underlay to the world’s day-to-day activities, a common view of the best “future Internet” is one that is hopefully as good as today’s, but maybe faster. Many of the engineers have gone on to better things, or to management positions. Companies are typically larger, shareholders are a little more keen on stability, and engineers are less able to go home to their companies and just implement new things.

If we want something other than “current course and speed” for the Internet’s development, I believe we need to put some thoughtful, active effort into rebuilding that sense of collaborative empowerment for the exploration of solutions to old problems and development of new directions — but taking into account and working with the business drivers of today’s Internet.

Clearly, it can be done, at least for a specific issue: I give you World IPv6 Launch.

Apart from that, what kinds of issues need tackling? Well, near-term issues include routing security, as well as fostering measurement and analysis of the currently deployed network. Longer-term issues can include things like dealing with rights, both in handling personal information (privacy) and in handling created content.

I don’t think it requires magic. It might involve more than one plan — since there never is a single right answer or one size that fits all for the Internet. But, mostly, I think it involves careful fostering, technical leadership, and general facilitation of collaboration and cooperation on real live Internet-touching activities.

I’m not just waving my hands around and writing pretty words in a blog post. Earlier this year, I invited a number of operators to come talk about an Unwedging Routing Security Activity (URSA), and in April, we had a meeting to discuss possibilities and particulars. You can find out more about the activity, including a report from the meeting, here.

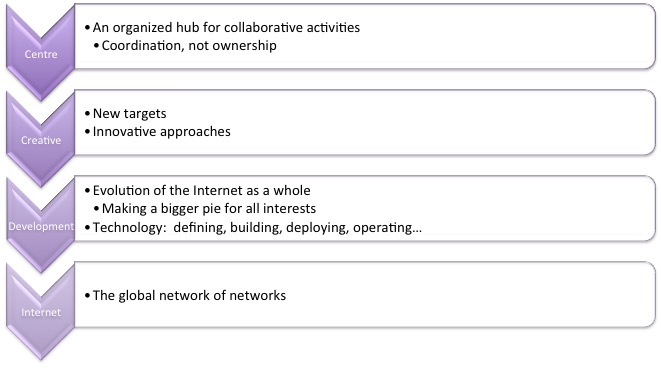

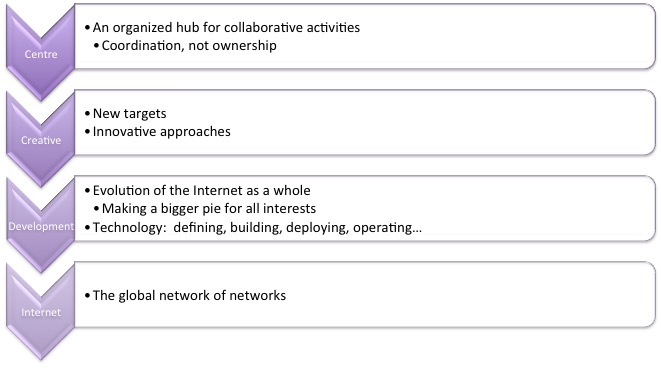

That was a proof point for the more general idea of this “coordination” function I described above — for now, let’s call it the Centre for the Creative Development of the Internet, and you can read more about that here: http://ccdi.thinkingcat.com/ .

In brief, I believe it’s possible to put together concrete activities that will move the Internet forward, that can be sustained by support from individual companies that have an interest in finding a collaborative solution to a problem that faces them. The URSA work is a first step and a proof point.

Now the hard part: this is not a launch, because while the idea is there, it’s not funded yet. I am actively pursuing ways to get it kick-started, so as to be able to make longer-term commitments to needed resources, and to get the idea out of the lab and working with Internet actors.

If you have thoughts or suggestions, I’m happy to hear them — ldaigle@thinkingcat.com . Even if it’s just a suggestion for a better name :^) .

And, if we’re lucky, the future of Internet development will mirror some of its past, embracing new challenges with creative, collaborative solutions.