RIP, Privacy? I certainly hope not. The dictionary.com definition of privacy includes “the state of being free from intrusion or disturbance in one’s private life or affairs”. In that light, the suggestion that “you have zero privacy anyway, get over it” (attributed to Scott McNealy) is kind of scary: if we’re trying to make the world a better place, we should have fewer intrusions and disturbances in our private lives, rather than expecting them to be the norm.

What we’ve seen over the last decade is an explosion in the exposure of personal data, due to:

- data being shared in electronic form (especially via the Internet)

- cheap computer storage making it feasible to collect and retain massive quantities of data

- cheap, fast computing that facilitates processing the masses of data to draw correlations and inferences

While privacy has traditionally been achieved through confidentiality of data, the factors above have outstripped our ability to develop appropriate responses, especially given how little data exposure it takes to have an impact on privacy.

In early June, I gave a talk to a George Mason University class, “Privacy and Ethics in an Interconnected World”, in the Applied Information Technology department. The assigned subject of the talk was “Regulating Internet Privacy”.

In preparing for the talk, I went through five “case studies” of data exposure and privacy impacts:

So-called Public Data: E.g., is it okay that everyone knows how much you paid for your house?

- At least in some jurisdictions, it is “public data”

- It does help inform future buyers in the area

But it can make for tense times with your family, friends and neighbours, which is most certainly an intrusion on your personal life.

And then along comes Zillow, which takes individual pieces of public data and assembles them into a picture of your neighbourhood, one that says something quite different about you, your worth, etc.

Personal Data in Corporate Hands: Using a particular service means you wind up sharing personal data.

- In part, this is an inevitable consequence of carrying out a business transaction.

- One argument is that it helps tailor the service to your interests.

But when a history is maintained, your usage is tracked, and data is sold to third parties, the implications may catch you by surprise. (Should you be denied health care coverage if you don’t walk 10,000 steps a day?)

Identity Data: Data that identifies you personally undermines any opportunity for anonymity.

- Freedom of speech means different things in different parts of the world, and the ability to voice an opinion without fear of repercussion goes along with it.

Accountability: On the flip side, there is a need for some ability to attach actions to the individuals who are responsible for them.

- When someone does something bad on or to the Internet, it should be possible to track them down

- Of course, “something bad” is in the eye of the beholder

This is the complement of the desire to provide anonymity.

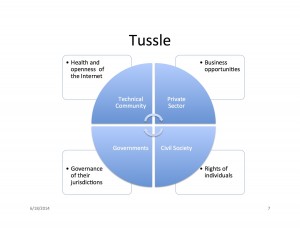

Pervasive Monitoring: Collecting any and all data about Internet connections, irrespective of source, destination or accountable person.

- Governments demanding access to the metadata of Internet connections, and sometimes to their content.

- According to reports, they have no a priori reason to track you, but it’s easier to collect all the data and figure out what they want later

But inferences from data mining are not always correct, and if you don’t know they are being made, you have no recourse to fix them.

One thing that all of the points above have in common is that the data is being used for purposes beyond those people originally understood or expected.

It’s pretty hard to function in today’s society without sharing some data some of the time, so complete confidentiality is not an effective option. But being cautious about what you share, and when, is necessary in this day and age. The Internet Society has some useful guidance on that front:

And the other side of the coin is making sure that the data that is shared is treated appropriately.

And we should, indeed, be able to “rest in peace”: peace of mind that our lives are not being undermined by misuse of our data.